Google has released Gemini 3, and the results are nothing short of remarkable. With both a Pro version and a Deep Think reasoning variant, Gemini 3 has established itself as the new benchmark leader, outperforming competitors from OpenAI and Anthropic across nearly every metric.

Unprecedented Benchmark Performance

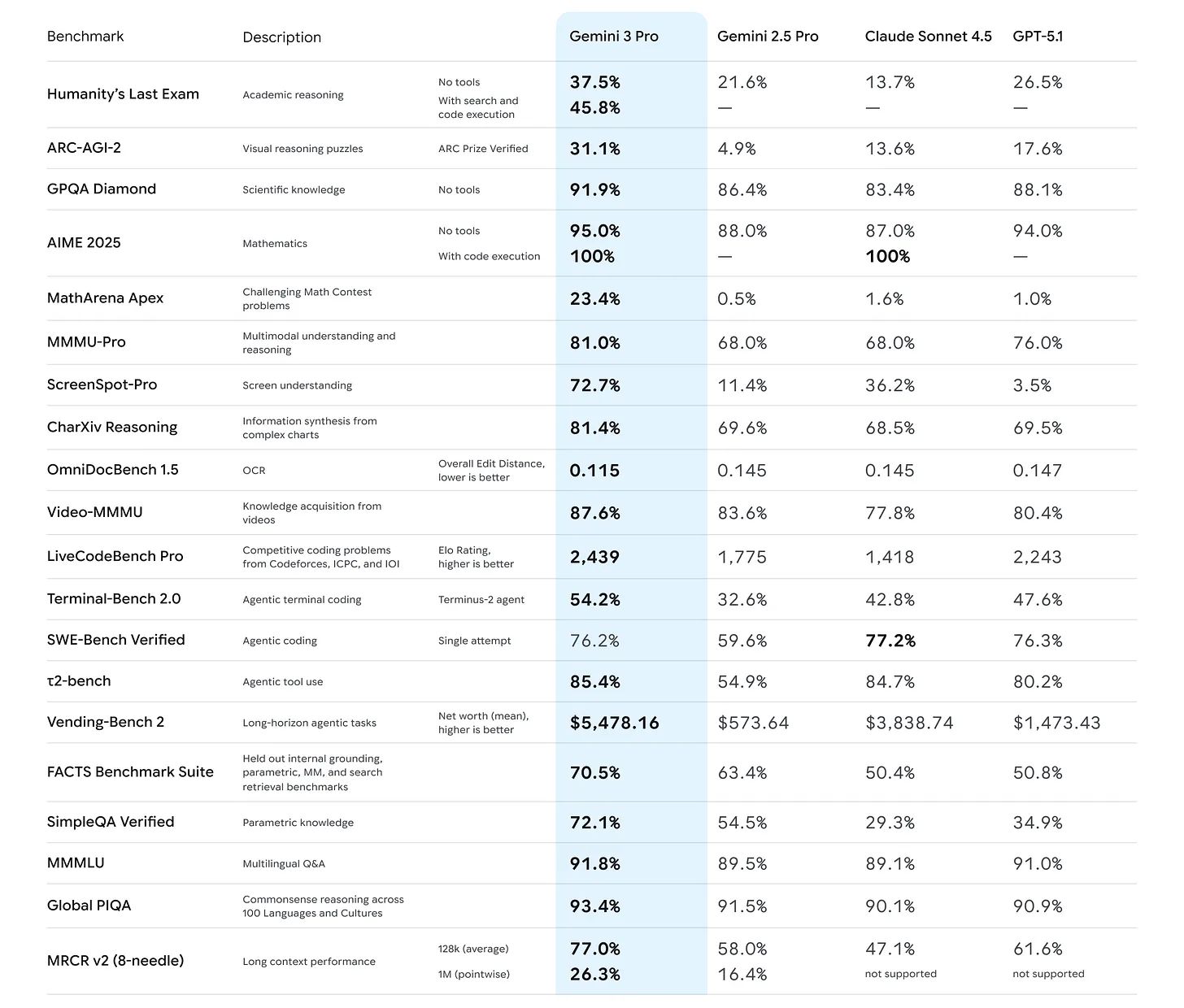

Gemini 3 Pro achieved top scores on 19 of 20 benchmarks tested against leading competitors, including GPT-5.1 and Claude Sonnet 4.5. This isn't incremental improvement; it's a lead that spans reasoning, factuality, multimodal understanding, and practical task execution.

Key Performance Highlights

Humanity's Last Exam: Gemini 3 scored 37.5% to GPT-5.1's 26.5%, an 11 percentage-point lead. This benchmark tests advanced reasoning with questions designed to push AI systems to their limits.

Vending-Bench 2: Running a simulated long-term vending business, Gemini 3 generated approximately $5,500 in profit compared to Claude Sonnet 4.5's $3,800, about 45% more, pointing to stronger sustained decision-making.

SimpleQA Verified: On this factuality benchmark, Gemini 3 Pro led the competition by roughly 40 percentage points, indicating substantially higher accuracy when providing verifiable information.

Artificial Analysis Intelligence Index: Gemini 3 scored three points above GPT-5.1, the largest lead at the top of this composite index in some time and a significant margin for such a broad evaluation.
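The highlights above mix two kinds of comparison: an absolute gap in percentage points (Humanity's Last Exam) and a relative advantage (Vending-Bench 2). A minimal Python sketch recomputes both from the figures quoted in this article; the helper names are hypothetical, and the dollar amounts are the rounded values given above rather than exact benchmark results.

```python
def point_gap(score_a: float, score_b: float) -> float:
    """Absolute difference between two percentage scores, in percentage points."""
    return score_a - score_b


def relative_advantage(value_a: float, value_b: float) -> float:
    """How much larger value_a is than value_b, as a fraction of value_b."""
    return value_a / value_b - 1.0


# Humanity's Last Exam: Gemini 3 (37.5%) vs GPT-5.1 (26.5%)
hle_gap = point_gap(37.5, 26.5)

# Vending-Bench 2: approximate profit, Gemini 3 (~$5,500) vs Claude Sonnet 4.5 (~$3,800)
vending_adv = relative_advantage(5500, 3800)

print(f"HLE gap: {hle_gap:.1f} percentage points")
print(f"Vending-Bench 2 advantage: {vending_adv:.0%}")
```

The distinction matters when reading benchmark claims: the same 11-point HLE gap is a relative improvement of about 41.5% over GPT-5.1's score (11 / 26.5).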

The ARC-AGI 2 Breakthrough

Perhaps the most striking achievement is Gemini 3's performance on ARC-AGI 2, a benchmark specifically designed to measure fluid intelligence rather than mere pattern memorization:

- Gemini 3 Pro: 31.1% accuracy

- Gemini 3 Deep Think: 45.1% accuracy

- GPT-5.1 Thinking: 17.6% accuracy

This represents roughly a 1.8x (Pro) to 2.6x (Deep Think) advantage over OpenAI's flagship reasoning model. ARC-AGI 2 tests the kind of abstract reasoning and novel pattern recognition that many consider a prerequisite for artificial general intelligence, which makes this gap particularly significant.
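The multiples are easy to check: divide each Gemini 3 score by GPT-5.1 Thinking's 17.6%. A minimal Python sketch using the ARC-AGI 2 scores listed above (the dictionary layout is purely illustrative):

```python
# ARC-AGI 2 accuracy (%) as reported in this article
scores = {
    "Gemini 3 Pro": 31.1,
    "Gemini 3 Deep Think": 45.1,
}
baseline_name = "GPT-5.1 Thinking"
baseline = 17.6

# Ratio of each Gemini 3 score to the GPT-5.1 Thinking baseline
ratios = {name: score / baseline for name, score in scores.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}x {baseline_name}")
```

Pro's lead works out to a little under 2x, while Deep Think clears 2.5x.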

The Deep Think variant's 45.1% score suggests that Google has made substantial progress in building models that can reason through novel problems without relying on memorized patterns from training data.

Technical Architecture

Gemini 3's design and training stand out in several ways:

- Native multimodal design: Built from scratch rather than retrofitted onto previous models

- Sparse mixture-of-experts architecture: Activates only a subset of the model's parameters (its "experts") for each input token rather than the full network

- Massive context window: 1-million-token input capacity with up to 64K output tokens

- Custom hardware training: Trained exclusively on Google's TPU infrastructure

- Enhanced capabilities: Autonomous software task execution, complex multi-step workflows, and superior understanding of handwritten and visual content across multiple languages
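Google has not published Gemini 3's internal routing details, so the following is only a generic illustration of sparse mixture-of-experts gating, not Gemini's implementation. In this minimal Python sketch, a router scores every expert for one input, only the top-k experts actually run, and their outputs are mixed using softmax weights renormalized over the selected experts. The toy experts, router scores, and the `top_k_moe` name are all hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[float], float]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_moe(x: float, router_logits: Sequence[float],
              experts: Sequence[Expert], k: int = 2) -> Tuple[float, List[int]]:
    """Run only the k highest-scoring experts on input x.

    Returns the weighted output and the indices of the experts that ran.
    """
    # Pick the k experts the router scores highest for this input.
    chosen = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)[:k]
    # Renormalize gate weights over the chosen experts only.
    weights = softmax([router_logits[i] for i in chosen])
    # Unchosen experts are never called: this is what makes the layer "sparse".
    output = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen


# Four toy "experts"; in a real model each would be a feed-forward network
# and routing would happen per token inside every MoE layer.
experts: List[Expert] = [
    lambda v: v + 1.0,
    lambda v: v * 2.0,
    lambda v: v - 3.0,
    lambda v: v * v,
]

out, used = top_k_moe(3.0, router_logits=[0.1, 2.0, -1.0, 1.0], experts=experts, k=2)
print(f"experts used: {used}, output: {out:.2f}")
```

Here only experts 1 and 3 are evaluated; the compute cost scales with k, not with the total number of experts, which is the efficiency argument behind sparse designs.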

Strategic Implications

The comprehensiveness of Gemini 3's advantages is particularly noteworthy. As analyst Alberto Romero points out, this isn't a case of excelling in one area while compromising others. Google's gains are spread across every major capability area: reasoning, factuality, multimodal understanding, and practical task execution.

This release demonstrates that Google maintains leadership across multiple AI development fronts simultaneously. The company's investment in custom hardware (TPUs) and native multimodal architecture appears to be paying dividends in model capability.

What This Means for Developers

For developers and organizations choosing AI infrastructure, Gemini 3 presents a compelling option:

Strengths:

- Best-in-class performance across diverse tasks

- Superior reasoning capabilities for complex problems

- Massive context windows for handling large codebases and documents

- Strong multimodal understanding for working with images, handwriting, and visual content

Considerations:

- Higher API pricing compared to some alternatives

- Ecosystem maturity compared to more established competitors

- Integration complexity depending on existing toolchains
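For the large-codebase use case, a rough pre-flight check can tell you whether a set of files is likely to fit in the 1 million token input window before you send it. This Python sketch assumes the common rule of thumb of about four characters per token; that ratio is an approximation, not Gemini's tokenizer, so for exact counts use the API's own token-counting endpoint. The function names, the `headroom` parameter, and the repository contents are hypothetical.

```python
from typing import Dict, Tuple

INPUT_TOKEN_LIMIT = 1_000_000  # input window cited in this article
CHARS_PER_TOKEN = 4  # rough heuristic (assumption), not the real tokenizer


def estimate_tokens(text: str) -> int:
    """Very rough token estimate from character count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(files: Dict[str, str], headroom: float = 0.9) -> Tuple[bool, int]:
    """Estimate whether all files fit in `headroom` of the input window.

    The remaining fraction is left free for the prompt and instructions.
    """
    total = sum(estimate_tokens(body) for body in files.values())
    return total <= INPUT_TOKEN_LIMIT * headroom, total


# Hypothetical repository: a small source file plus one large generated data file.
repo = {
    "main.py": "print('hello')\n" * 1_000,
    "data.csv": "a,b,c\n" * 1_000_000,
}
ok, tokens = fits_in_context(repo)
print(f"~{tokens:,} tokens, fits: {ok}")
```

Leaving headroom matters because prompts and instructions also count against the input window; the 64K output limit is a separate budget. In this example the generated CSV alone blows the budget, a hint to filter what you send rather than dumping an entire repository.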

The Competitive Landscape

This release intensifies competition in the frontier AI model space. While OpenAI and Anthropic have made significant advances with their recent releases, Google has demonstrated that the race for AI capability leadership remains wide open.

The particularly strong showing on ARC-AGI 2 suggests that progress toward more general AI capabilities continues to accelerate. The gap between Gemini 3 Deep Think and GPT-5.1 Thinking on this benchmark indicates that different architectural approaches and training methodologies can yield substantially different results on tests of fluid intelligence.

Looking Forward

Gemini 3's benchmark dominance represents more than competitive positioning; it demonstrates continued rapid progress in AI. Its strong performance on reasoning tasks, factuality measures, and practical applications suggests genuine advances in capability rather than mere optimization of existing approaches.

As the AI landscape continues to evolve at breakneck speed, one thing is clear: the competition between frontier labs is driving unprecedented progress. For those building AI-powered applications, the expanding capabilities of models like Gemini 3 open up new possibilities that seemed out of reach just months ago.

The question now isn't whether AI models can handle complex tasks—it's how quickly we can adapt our workflows and applications to leverage these rapidly expanding capabilities.

Jason Cochran

Software Engineer | Cloud Consultant | Founder at Strataga

27 years of experience building enterprise software for oil & gas operators and startups. Specializing in SCADA systems, field data solutions, and AI-powered rapid development. Based in Midland, TX serving the Permian Basin.